Hearing (or audition) is the sense of detecting sound, that is, receiving information about the environment from vibratory movement communicated through a medium such as air, water, or ground. It is one of the traditional five senses, along with sight, touch, smell, and taste.

Both vertebrates and arthropods have a sense of hearing. In humans and other vertebrates, hearing is performed primarily by the auditory system: Sound is detected by the ear and transduced into nerve impulses that are perceived by the brain.

For animals, hearing is a fundamentally important adaptation for survival, maintenance, and reproduction. For example, it is integral to communication within a species, as in the mating calls of katydids; to defense, as when a deer's hearing warns it of approaching predators; and to securing food, as when a fox's hearing helps it locate its prey. Hearing also serves as one half of an essential communication loop when it helps colonial birds flock together and when a penguin recognizes the unique call of its mate or offspring and follows that call to find it.

Humans attach additional value to the sense of hearing because it helps them relate to others and to nature. Hearing is doubly important for harmonious relations of giving and receiving: on the one hand, a person may perform music or voice their own thoughts and emotions to be heard by others; on the other hand, a person may hear music, the thoughts and emotions that others express in speech, or the sounds of nature.

Hearing is integral to a fully lived human life, yet people who are born deaf or who lose their hearing while young can, with loving care and appropriate training, learn sign language, which is "spoken" with constantly changing movements and orientations of the hands, head, lips, and body, and can converse readily with others who "speak" the same language. Hundreds of different sign languages are in use throughout the world, as local deaf cultures have each developed their own.

Overview

Hearing is a sense, that is, a mechanism or faculty by which a living organism receives information about its external or internal environment. In other words, like the sense of sight, it is an inherent capability or power to receive and process stimuli from outside and inside the body. The term "sense" is often defined more narrowly in relation to higher animals. In that narrower usage, a sense is a system in which sensory cells respond to a specific kind of physical energy (from internal or external stimuli) and convert it into nerve impulses that travel to the brain (typically to a specialized area), where the signals are received and analyzed.

Although schoolchildren are routinely taught that there are five senses (sight, hearing, touch, smell, and taste; a classification first devised by Aristotle), a broader schema presents these five external senses as complemented by four internal senses (pain, balance, thirst, and hunger), with at least two more senses observed in some other organisms.

Hearing is the ability to perceive sound from a source outside the body through an environmental medium. The cause of sound is vibratory movement from a disturbance, communicated to the hearing apparatus through an environmental medium, such as air. Scientists group all such vibratory phenomena under the general category of "sound," even when they lie outside the range of human hearing.

Solids, liquids, and gases are all capable of transmitting sound. Sound is transmitted by means of sound waves. In air, a sound wave is a disturbance that creates a region of high pressure (compression) followed by one of low pressure (rarefaction). These variations in pressure are transferred to adjacent regions of the air in the form of a spherical wave radiating outward from the disturbance. Sound is therefore characterized by the properties of waves, such as frequency, wavelength, period, amplitude, and velocity (or speed).
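Two of the wave properties listed above are tied together by simple relations: the period is the reciprocal of the frequency, and the wavelength is the speed divided by the frequency. The Python sketch below computes both. The speed of 343 m/s is an assumed value for dry air at about 20 °C, and the function name `wave_properties` is illustrative only.

```python
# Assumed speed of sound in dry air at roughly 20 °C, in metres per second.
SPEED_OF_SOUND_AIR_M_S = 343.0


def wave_properties(frequency_hz: float,
                    speed_m_s: float = SPEED_OF_SOUND_AIR_M_S) -> tuple[float, float]:
    """Return (period in seconds, wavelength in metres) for a sound wave.

    The period is the reciprocal of frequency; the wavelength is the distance
    the wave travels during one period, i.e. speed divided by frequency.
    """
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    period_s = 1.0 / frequency_hz
    wavelength_m = speed_m_s / frequency_hz
    return period_s, wavelength_m


if __name__ == "__main__":
    # Frequencies spanning the approximate limits of human hearing.
    for f in (20.0, 1_000.0, 20_000.0):
        period, wavelength = wave_properties(f)
        print(f"{f:>8.0f} Hz: period {period * 1e3:.3f} ms, "
              f"wavelength {wavelength:.4f} m")
```

Under this assumed speed, a 20 Hz tone has a wavelength of about 17 m, while a 20 kHz tone has a wavelength of under 2 cm.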

Hearing functions to detect the presence of sound, as well as to identify the location and type of sound, and its characteristics (whether it is getting louder or softer, for instance). Humans and many animals use their ears to hear sound, but loud sounds and low-frequency sounds can be perceived by other parts of the body as well, through the sense of touch.

Hearing in animals

Not all sounds are normally audible to all animals. Each species has a range of normal hearing for both loudness (amplitude) and pitch (frequency). Many animals use sound in order to communicate with each other and hearing in these species is particularly important for survival and reproduction. In species using sound as a primary means of communication, hearing is typically most acute for the range of pitches produced in calls and speech.

Frequencies that humans can hear are called audio, or sonic. Frequencies above the audio range are called ultrasonic, and frequencies below it are called infrasonic. Some bats use ultrasound for echolocation while in flight. Dogs can hear ultrasound, which is the principle behind "silent" dog whistles. Snakes sense infrasound through their bellies, and whales, giraffes, and elephants use it for communication.

As with other vertebrates, fish have an inner ear to detect sound, although through the medium of water. Fish, larval amphibians, and some adult amphibians that live in water also have a lateral line system arranged on or under the skin that functions somewhat like a sense of hearing, but also like a sense of touch. The lateral line system is a set of sense organs that also have connections in the brain with the nerve pathways from the auditory system of the inner ear, but it is a different system (Lagler et al. 1962). It responds to a variety of stimuli, and in some fish has been shown to respond to irregular pressure waves and low-frequency vibrations, but it is also involved in "distant touch" location of objects (Lagler et al. 1962).

The physiology of hearing in vertebrates is not yet fully understood. The molecular mechanism of sound transduction within the cochlea and the processing of sound by the brain (in the auditory cortex) are two areas that remain largely unknown.

Hearing in humans

Humans can generally hear sounds with frequencies between 20 Hz and 20 kHz, that is, between 20 and 20,000 cycles per second (hertz, Hz). Human hearing can discriminate small differences in loudness (intensity) and pitch (frequency) across that large range of audible sound. The range of frequencies a healthy person can detect varies from one individual to the next, and it varies significantly with age, occupational hearing damage, and gender. Some individuals (particularly women) can hear pitches up to 22 kHz and perhaps beyond, while others are limited to about 16 kHz. The ear is most sensitive to frequencies around 3,500 Hz. Sounds above 20,000 Hz are classified as ultrasound, and sounds below 20 Hz as infrasound. Most adults' ability to hear sounds above about 8 kHz begins to deteriorate in early middle age (Vitello 2006).
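The frequency bands described above can be expressed as a small classification routine. The Python sketch below uses the conventional 20 Hz and 20 kHz boundaries from the text. Because the upper limit differs between individuals (about 16 kHz to 22 kHz), the hypothetical helper `heard_by_listener` takes that limit as a parameter instead of fixing it. Both function names are illustrative assumptions.

```python
# Conventional band edges stated in the text, in hertz.
INFRASOUND_UPPER_HZ = 20.0
ULTRASOUND_LOWER_HZ = 20_000.0


def classify_frequency(frequency_hz: float) -> str:
    """Label a frequency as infrasound, audible (sonic), or ultrasound."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    if frequency_hz < INFRASOUND_UPPER_HZ:
        return "infrasound"
    if frequency_hz > ULTRASOUND_LOWER_HZ:
        return "ultrasound"
    return "audible"


def heard_by_listener(frequency_hz: float, upper_limit_hz: float,
                      lower_limit_hz: float = INFRASOUND_UPPER_HZ) -> bool:
    """Hypothetical check against one listener's own frequency limits.

    The upper limit varies between individuals, so it is passed in
    rather than taken from the conventional 20 kHz boundary.
    """
    return lower_limit_hz <= frequency_hz <= upper_limit_hz


if __name__ == "__main__":
    for f in (10.0, 3_500.0, 18_000.0, 25_000.0):
        print(f, classify_frequency(f),
              heard_by_listener(f, upper_limit_hz=16_000.0),
              heard_by_listener(f, upper_limit_hz=22_000.0))
```

An 18 kHz tone, for example, is classed as audible yet falls outside the range of a listener whose upper limit is 16 kHz.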

The amplitude of a sound wave is specified in terms of its pressure, measured in pascals (Pa). Because the human ear can detect sounds across a very wide range of amplitudes, sound pressure is often reported as a sound pressure level (SPL) on a logarithmic decibel (dB) scale. The zero point of the decibel scale is commonly set at the amplitude of the quietest sounds that humans can hear. In air, that amplitude is approximately 20 μPa (micropascals), which defines a sound pressure level of 0 dB re 20 μPa (often incorrectly abbreviated as 0 dB SPL). (When using sound pressure levels, always state the reference sound pressure used. Commonly used reference sound pressures are 20 µPa in air and 1 µPa in water.)
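The decibel scale described above can be written as L_p = 20 log10(p / p_ref), where p is the root-mean-square sound pressure and p_ref is the reference pressure (20 µPa in air, 1 µPa in water). The factor is 20 rather than 10 because sound intensity is proportional to the square of pressure. The Python sketch below converts in both directions; the function names `spl_db` and `pressure_from_spl` are illustrative assumptions.

```python
import math

# Reference pressures quoted in the text, in pascals.
P_REF_AIR_PA = 20e-6    # 20 µPa, the reference used in air
P_REF_WATER_PA = 1e-6   # 1 µPa, the reference used in water


def spl_db(pressure_pa: float, p_ref_pa: float = P_REF_AIR_PA) -> float:
    """Sound pressure level in dB relative to p_ref_pa.

    Intensity scales with pressure squared, so 10*log10(p**2 / p_ref**2)
    simplifies to 20*log10(p / p_ref).
    """
    if pressure_pa <= 0 or p_ref_pa <= 0:
        raise ValueError("pressures must be positive")
    return 20.0 * math.log10(pressure_pa / p_ref_pa)


def pressure_from_spl(level_db: float, p_ref_pa: float = P_REF_AIR_PA) -> float:
    """Inverse of spl_db: the RMS pressure in pascals for a given level."""
    return p_ref_pa * 10.0 ** (level_db / 20.0)


if __name__ == "__main__":
    print(f"20 µPa in air -> {spl_db(20e-6):.1f} dB re 20 µPa")
    print(f"1 Pa in air   -> {spl_db(1.0):.1f} dB re 20 µPa")
    print(f"1 Pa in water -> {spl_db(1.0, P_REF_WATER_PA):.1f} dB re 1 µPa")
    # Levels cited in connection with hearing damage.
    for level in (85.0, 130.0):
        print(f"{level:.0f} dB re 20 µPa -> {pressure_from_spl(level):.3f} Pa")
```

The same 1 Pa pressure comes out at about 94 dB re 20 µPa but 120 dB re 1 µPa, which is why the reference must always be quoted. By the same formula, the 85 dB and 130 dB levels cited for hearing damage correspond to roughly 0.36 Pa and 63 Pa.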

Prolonged exposure to sound pressure levels above 85 dB can permanently damage the ear, sometimes resulting in tinnitus and hearing impairment. Sound levels above 130 dB are considered more than the human ear can withstand and may cause serious pain and permanent damage. At very high amplitudes, sound waves exhibit nonlinear effects, including shock waves.

Like touch, audition requires sensitivity to the movement of molecules in the world outside the organism. Both hearing and touch are types of mechanosensation (Kung 2005). [1]

Mechanism

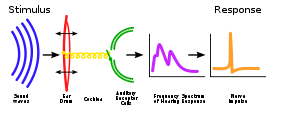

Human hearing takes place by a complex mechanism involving the transformation of sound waves by the combined operation of the outer ear, middle ear, and inner ear into nerve impulses transmitted to the appropriate part of the brain.

Outer ear

The visible portion of the outer ear in humans is called the auricle, or the pinna. It is a convoluted cup that arises from the opening of the ear canal on either side of the head. The auricle helps direct sound to the ear canal. Both the auricle and the ear canal amplify and guide sound waves to the tympanic membrane, or eardrum.

In humans, amplification of sound ranges from 5 to 20 dB for frequencies within the speech range (about 1.5–7 kHz). Because the shape and length of the human external ear preferentially amplify sound at speech frequencies, the external ear also improves the signal-to-noise ratio for speech sounds (Brugge and Howard 2002).
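A gain in decibels translates into a multiplication factor that depends on the quantity being measured: 20 dB per factor of 10 for pressure, and 10 dB per factor of 10 for intensity (power). The Python sketch below applies this to the 5 to 20 dB outer-ear amplification quoted above; the function name `gain_to_ratios` is an illustrative assumption.

```python
def gain_to_ratios(gain_db: float) -> tuple[float, float]:
    """Return (pressure ratio, intensity ratio) for a gain in decibels.

    Pressure is a field quantity (20 dB per factor of 10); intensity is a
    power quantity (10 dB per factor of 10).
    """
    pressure_ratio = 10.0 ** (gain_db / 20.0)
    intensity_ratio = 10.0 ** (gain_db / 10.0)
    return pressure_ratio, intensity_ratio


if __name__ == "__main__":
    # Bounds of the outer-ear amplification quoted in the text.
    for gain in (5.0, 20.0):
        p, i = gain_to_ratios(gain)
        print(f"{gain:>4.0f} dB gain: pressure x{p:.2f}, intensity x{i:.1f}")
```

On this reading, the outer ear multiplies the sound pressure reaching the eardrum by roughly 1.8 to 10 within the speech range.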

Middle ear

The eardrum is stretched across the outer side of a bony, air-filled cavity called the middle ear. Just as the tympanic membrane is like a drum head, the middle ear cavity is like a drum body.

Much of the middle ear's role in hearing involves converting sound waves in the air surrounding the body into vibrations of the fluid within the cochlea of the inner ear. Sound waves move the tympanic membrane, which moves the ossicles (a set of tiny bones in the middle ear), which in turn move the fluid of the cochlea.

Inner ear

The cochlea is a snail-shaped, fluid-filled chamber, divided along almost its entire length by a membranous partition. The cochlea propagates mechanical signals from the middle ear as waves in fluid and membranes and then transduces them into nerve impulses, which are transmitted to the brain. The inner ear also contains the vestibular system, which is responsible for the sensations of balance and motion.

Central auditory system

This sound information, now re-encoded, travels along the auditory nerve and through parts of the brainstem (for example, the cochlear nucleus and inferior colliculus), being further processed at each waypoint. The information eventually reaches the thalamus, and from there it is relayed to the cortex. In the human brain, the primary auditory cortex is located in the temporal lobe. It is this central auditory system (CAS), rather than the ear itself, that interprets the pitch of a sound. Likewise, when a person covers their ears against a loud noise, it is the CAS that prompts the reaction.

Representation of loudness, pitch, and timbre

Nerves transmit information through discrete electrical impulses known as "action potentials." As the loudness of a sound increases, the rate of action potentials in the auditory nerve fiber increases. Conversely, at lower sound intensities (low loudness), the rate of action potentials is reduced.

Different repetition rates and spectra of sounds, that is, pitch and timbre, are represented on the auditory nerve by a combination of rate-versus-place and temporal-fine-structure coding. That is, different frequencies cause a maximum response at different places along the organ of Corti, while different repetition rates of low enough pitches (below about 1500 Hz) are represented directly by repetition of neural firing patterns (known also as volley coding).

Loudness and duration of sound (within small time intervals) may also influence pitch to a small extent. For example, for sounds higher than 4000 Hz, as loudness increases, the perceived pitch also increases.

Localization of sound

The ability to estimate where a sound is coming from, called sound localization, depends on the hearing ability of each of the two ears and on the exact quality of the sound. Because the ears lie on opposite sides of the head, a sound reaches the nearer ear first, and its amplitude is larger in that ear.

The shape of the pinna (outer ear) and of the head itself results in frequency-dependent variation in the amount of attenuation a sound undergoes as it travels from the source to the ear. Furthermore, this variation depends not only on the azimuthal angle of the source but also on its elevation. It is described by the head-related transfer function (HRTF). As a result, humans can locate sound in both azimuth and elevation. Most of the brain's ability to localize sound depends on interaural (between-ear) intensity differences and interaural time, or phase, differences. In addition, humans can estimate the distance to a sound source, based primarily on how reflections in the environment, such as room reverberation, modify the sound.
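The interaural time difference can be estimated with a deliberately simple model that treats the two ears as points a fixed distance apart and ignores the shadowing effect of the head. A distant source at azimuth θ (0° straight ahead, 90° directly to one side) must travel an extra distance of d·sin θ to reach the farther ear. The ear spacing of 0.18 m and the speed of 343 m/s are assumed illustrative values, and `interaural_time_difference` is a hypothetical helper, not a standard model of the human head.

```python
import math

EAR_SPACING_M = 0.18        # assumed distance between the ears, metres
SPEED_OF_SOUND_M_S = 343.0  # assumed speed of sound in air, m/s


def interaural_time_difference(azimuth_deg: float,
                               ear_spacing_m: float = EAR_SPACING_M,
                               speed_m_s: float = SPEED_OF_SOUND_M_S) -> float:
    """Arrival-time difference in seconds between the two ears.

    Simplified far-field model: the extra path to the farther ear is
    ear_spacing * sin(azimuth). Positive values mean the sound reaches
    the right ear first (positive azimuth is to the listener's right).
    """
    extra_path_m = ear_spacing_m * math.sin(math.radians(azimuth_deg))
    return extra_path_m / speed_m_s


if __name__ == "__main__":
    for azimuth in (0, 15, 45, 90):
        itd_us = interaural_time_difference(azimuth) * 1e6
        print(f"azimuth {azimuth:>2} deg: ITD about {itd_us:.0f} microseconds")
```

The model's maximum, roughly half a millisecond for a source directly to one side, gives a sense of how small the timing differences are that the brain compares. Because it ignores the head and the pinna, the model says nothing about elevation, which depends on the HRTF.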

Human echolocation is a technique used by some blind humans to navigate within their environment by listening for echoes of clicking or tapping sounds that they emit.

Hearing and language

Human beings develop spoken language within the first few years of life, and the ability to hear is central to this learning process. Gaining literacy generally depends on understanding speech. In the great majority of written languages, the sound of the word is coded in symbols, so hearing is important for learning to read the written word. Listening also plays an important role in learning a second language.

Hearing disability - Deafness

Deafness, a lack of the sense of hearing, may exist from birth or be acquired after birth. It can be a serious impediment to full socialization and development of a mature sense of identity unless special measures are taken.

Causes

The causes of deafness and hearing loss in newborns, children, and youth in the U.S., according to the Gallaudet Research Institute's Annual Survey: 2004-2005 Regional and National Summary, are:

- Genetic/Hereditary/Familial—23 percent

- Pregnancy Related—12 percent

- Post-birth Disease/Injury—15 percent

- Undetermined cause—50 percent

Among the genetic causes, three named syndromes together accounted for 18 percent of the cases: Down, CHARGE (a craniofacial disorder), and Waardenburg (a disorder causing unusual physical features). No other genetic cause accounted for much more than 3 percent of the cases. The most common pregnancy-related causes were premature birth, other complications of pregnancy, and cytomegalovirus (CMV), a common virus that an infected mother can pass on to her unborn child. In the post-birth disease/injury category, the most common causes were otitis media (inflammation of the middle ear) and meningitis (inflammation of the protective membranes of the central nervous system).[2]

Consequences

Hearing impairment can inhibit not only learning to understand the spoken word, but also learning to speak and read. By the time it is apparent that a severely hearing impaired (deaf) child has a hearing deficit, problems with communication may have already caused issues within the family and hindered social skills, unless the child is part of a deaf community where sign language is used instead of spoken language. In many developed countries, hearing is evaluated during the newborn period in an effort to prevent the inadvertent isolation of a deaf child in a hearing family.

Although an individual who hears and learns to speak and read will retain the ability to read even if hearing becomes too impaired to hear voices, a person who never heard well enough to learn to speak is rarely able to read proficiently (Morton and Nance 2006). Most evidence points to early identification of hearing impairment as key if a child with very insensitive hearing is to learn spoken language and proficient reading.

Spiritual hearing

Perceiving incorporeal things of an auditory nature would be considered spiritual hearing. (In a broader sense, the term spiritual hearing may refer to listening to one's inner voice, conscience, or intuition.) Synesthesia, a mixing of the senses, also relates to this phenomenon, as when an individual hears colors or sees sounds. For example, composer Franz Liszt claimed to see colors when hearing musical notes.

The term "sense" refers to a mechanism or faculty by which a living organism receives information about its external or internal environment. As defined, this term can include both physiological methods of perception, involving reception of stimuli by sensory cells, and incorporeal methods of perception, which might be labeled spiritual senses—in other words, a mechanism or faculty, such as hearing, to receive and process stimuli of an incorporeal nature.

There are references in sacred scripture, as well as in popular books and media, to individuals who see, hear, or even touch persons who have passed away. Such a faculty can be postulated as arising from the soul, or spiritual self, of a human being. The senses associated with the spiritual self, that is, the spiritual senses, would then allow recognition of the spiritual selves of other individuals, or reception of stimuli from them. Extrasensory perception, or ESP, is the name often given to an ability to acquire information by means other than the five canonical senses (taste, sight, touch, smell, and hearing) or any other physical sense well known to science (balance, proprioception, etc.).

Hearing tests

Hearing can be measured by behavioral tests using an audiometer. Electrophysiological tests of hearing can provide accurate measurements of hearing thresholds even in unconscious subjects. Such tests include the auditory brainstem response (ABR), otoacoustic emissions, and electrocochleography (ECochG). Technical advances in these tests have allowed hearing screening for infants to become widespread.

Hearing underwater

Hearing sensitivity and the ability to localize sound sources are reduced underwater, where sound travels faster than in air. Underwater hearing occurs by bone conduction, and localization of sound appears to depend on differences in amplitude detected by bone conduction (Shupak et al. 2005).

Notes

- ↑ C. Kung, "A possible unifying principle for mechanosensation," Nature 436(7051):647–654, Aug 4, 2005.

- ↑ Gallaudet Research Institute 2004-2005 Regional and National Summary Retrieved November 29, 2007.

References

- Brugge, J. F., and M. A. Howard. 2002. Hearing. In V. Ramachandran, Encyclopedia of the Human Brain. San Diego: Academic Press. ISBN 0122272102

- Morton, C. C., and W. E. Nance. 2006. Newborn hearing screening—a silent revolution. New England Journal of Medicine 354(20): 2151-2164.

- Shupak, A., Z. Sharoni, Y. Yanir, Y. Keynan, Y. Alfie, and P. Halpern. 2005. Underwater hearing and sound localization with and without an air interface. Otology & Neurotology 26(1): 127-130.

- Vitello, P. 2006. A ring tone meant to fall on deaf ears. New York Times, June 12, 2006. Retrieved September 3, 2007.


Credits

New World Encyclopedia writers and editors rewrote and completed the Wikipedia article in accordance with New World Encyclopedia standards. This article abides by terms of the Creative Commons CC-by-sa 3.0 License (CC-by-sa), which may be used and disseminated with proper attribution. Credit is due under the terms of this license that can reference both the New World Encyclopedia contributors and the selfless volunteer contributors of the Wikimedia Foundation.

Note: Some restrictions may apply to use of individual images which are separately licensed.